Karim Pichara

I'm currently an Associate Professor at the Computer Science Department from Universidad Católica de Chile and Research Assistant at the Institute of Applied Computational Science (IACS) from Harvard University. I'm also a researcher in the Millenium Institute of Astrophysics (MAS). I received a Ph. D. degree in Computer Science from Universidad Católica in Chile, 2010. During 2011-2012, I did a postdoc in Machine Learning for Astronomy, at Harvard University with professor Pavlos Protopapas. My main research areas are Data Science and Machine Learning for Astronomy, focusing in the development of several new tools for automatic and streaming classification of variable stars, detection of quasars, missing data algorithms, meta-classification, transfer learning, Bayesian Model Selection, Variational Inference and Deep Learning, among others. Besides my academic research, I'm the CTO and co-founder at NotCo, an innovative tech startup that is changing the way that food is made in the world.

Research Interest: Data Mining, Machine Learning, Astro-Statistics and Astro-Informatics

kpb@ing.puc.cl - kpichara@seas.harvard.edu

Pontificia Universidad Católica de Chile

School of Engineering

Computer Science Department

Assistant Professor

Data Mining

Approximate Bayesian Inference

Probabilistic Graphical Models

Advanced Python Programming

Publications

Plenary talk at ACAT 2016

Harvard-Chile students exchange program started

Presentation at the Semantic Web Seminar

Research

Our research is focused on Machine Learning and Data Science, mainly applied to the analysis of Astronomical Time Series. Our main interest is to develop automatic tools to classify objects in the Universe, based on information provided by telescopes. There are several important challenges to overcome, such as huge data processing algorithms, parallel programming, non structured and multivariate time series representation, intelligent integration of expert models, dealing with missing data, unsupervised representation, among others. Chile is one of the most attractive places to perform scientist research in Astronomy, given that most of the state of the art telescopes are installed in the North of the Country.

A Global Data Warehouse for Astronomy

This project is leaded by Javier Machin (Master Student). Javier is developing a Data Warehouse for Astronomy (today just for time domain astronomy), where we get together several (soon will be most of them) astronomical catalogs (OGLE, VVV, MACHO, EROS, Catalina Survey, Stripe 82, etc.), preprocessed, integrated, with visualization tools, visual querying tools, selection and integration tools, slicing and dicing capabilities, data sharing, machine learning models and lightcurve parameters extraction (FATS), among others. This project has been developed in collaboration with Professor Andrés Neyém.

Automatic Survey-Invariant Classification of Variable Stars

This project has been developed by Patricio Benavente (Master Student), in collaboration with Professor Pavlos Protopapas. Lightcurve datasets have been available to astronomers long enough, thus allowing them to perform deep analysis over several variable sources and generating useful catalogs with identified variable stars. The products of these studies are labeled data that enable supervised learning models to be trained successfully. However, when these models are blindly applied to data from new sky surveys their performance drops significantly. Furthermore, unlabeled data becomes available at a much higher rate than its labeled counterpart, since labeling is a manual and time-consuming effort. Domain adaptation techniques aim to learn from a domain where labeled data is available, the source domain, and through some adaptation perform well on a different domain, the target domain. We propose a full probabilistic model that represents the joint distribution of features from two surveys as well as a probabilistic transformation of the features between one survey to the other.

Time Series Variability Tree for fast light curve retrieval

This project has been developed by Lucas Valenzuela (Master Student). We propose a new algorithm and data structure to index astronomical lightcurves in order to provide astronomer with a fast lightcurve search in catalogs of millions of objects. We believe that this tool is very useful to perform manual exploration of catalogs, where astronomers need to find certain type of lightcurves, similar to some lightcurve they already have. Also, classification algorithms based on similarities can rely on this model, where instead of using traditional search methods they can use this indexing procedure and perform much faster.

A full probabilistic model for yes/no query type Crowdsourcing

This project has been developed by Belén Saldías (Master Student), in collaboration with Professor Pavlos Protopapas. Crowdsourcing has become widely used in supervised scenarios where unlabeled data is abundant and labeled data is hard to obtain and scarce. Despite there are several crowdsourcing models in the literature, most of them assume annotators can provide answers for standard complete questions. In classification contexts, complete questions mean that an annotator is asked to discern among all the possible classes. Unfortunately, that assumption is not always true in realistic scenarios. In this work we provide a full probabilistic model for a new type of queries where instead of asking complete questions to the labelers, we just ask queries that require a "yes" or "no" response.

Visualization tool for relevant patterns in Light Curves

This project has been developed by Christian Pieringer, in collaboration with Professors Márcio Catelán and Pavlor Protopapas. Current Machine Learning methods for automatic lightcurves classification have shown successful results, reaching high classification performance in known catalogs. Recently, visualization of time series is attracting more attention in machine learning as a tool to visually help experts for recognizing significant patterns in the complex dynamics of the astronomical time series. Inspired in dictionary-based classifiers, we present a method that naturally provides the visualization of salient parts on light curves. These classifiers code the relevant parts intrinsically assigning weights to each word in the dictionary according to their contribution in the signal approximation. Our approach delivers an intuitive visualization according to the relevance of each part in the time series. Results suggest the effectiveness of this method to highlight salient patterns. We also propose a pipeline that uses our method for observational time scheduling.

Automatic classification of poorly observed variable stars

This project has been developed by Nicolas Castro (Master Student), in collaboration with Professor Pavlos Protopapas. Automatic classification methods applied to sky surveys have revolutionized the astronomical target selection process. Most surveys generate a vast amount of time series or lightcurves. Unfortunately, astronomical data take several years to be completed, producing partial time series that generally remain without analysis until the observations are completed. This happens because state of the art methods rely on a variety of statistical descriptors or features that present an increasing degree of dispersion when the number of observations decreases, which reduces their precision. In this work we propose a novel method that increases the performance of automatic classifiers of variable stars by incorporating the deviations that scarcity of observations produces. Our method uses Gaussian Process Regression to form a probabilistic model of the values observed for each lightcurve.

Meta Classification for variable stars

This project has been developed by Karim Pichara, in collaboration with Professor Pavlos Protopapas and Daniel León. The need for the development of automatic tools to explore astronomical databases has been recognized since the inception of CCDs and modern computers. Astronomers already have developed solutions to tackle several science problems, such as automatic classification of stellar objects, outlier detection, and globular clusters identification, among others. New science problems emerge and it is critical to be able to re-use the models learned before, without rebuilding everything from the beginning when the science problem changes. In this paper, we propose a new meta-model that automatically integrates existing classification models of variable stars. The proposed meta-model incorporates existing models that are trained in a different context, answering different questions and using different representations of data. Conventional mixture of experts algorithms in machine learning literature can not be used since each expert (model) uses different inputs. We also consider computational complexity of the model by using the most expensive models only when it is necessary. We test our model with EROS-2 and MACHO datasets, and we show that we solve most of the classification challenges only by training a meta-model to learn how to integrate the previous experts.

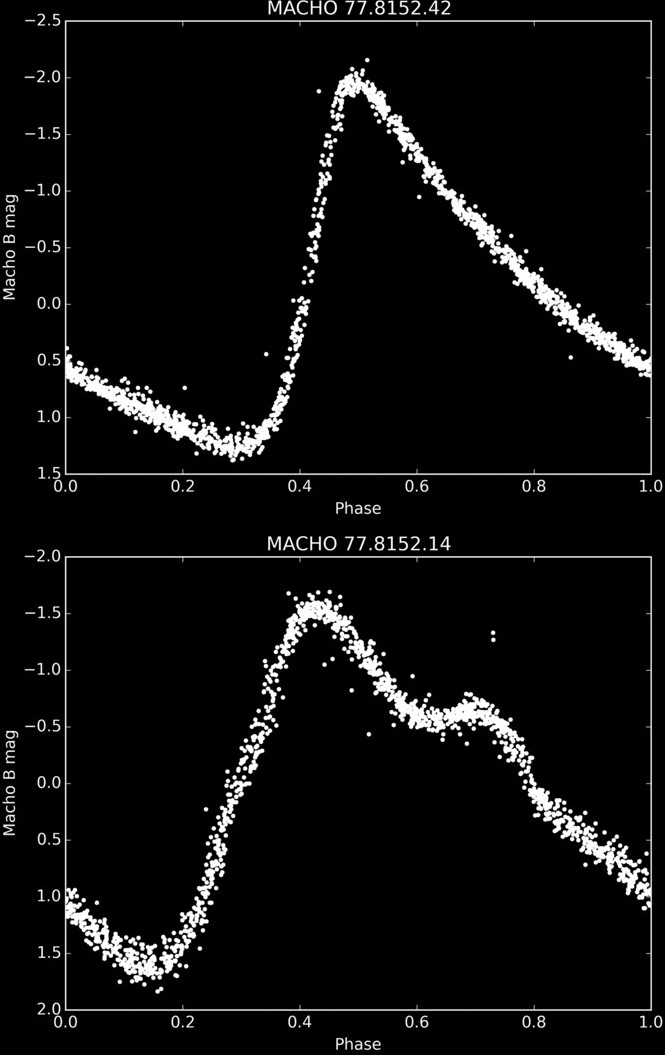

Clustering based feature learning on variable stars

This project was developed by Cristóbal Mackenzie, in collaboration with Professor Pavlos Protopapas. The success of automatic classification of variable stars strongly depends on the lightcurve representation. Usually, lightcurves are represented as a vector of many descriptors designed by astronomers called features. These descriptors are expensive in terms of computing, require substantial research effort to develop and do not guarantee a good classification. Today, lightcurve representation is not entirely automatic; algorithms must be designed and manually tuned up for every survey. The amounts of data that will be generated in the future mean astronomers must develop scalable and automated analysis pipelines. In this work we present a feature learning algorithm designed for variable objects. Our method works by extracting a large number of lightcurve subsequences from a given set, which are then clustered to find common local patterns in the time series. Representatives of these common patterns are then used to transform lightcurves of a labeled set into a new representation that can be used to train a classifier. The proposed algorithm learns the features from both labeled and unlabeled lightcurves, overcoming the bias using only labeled data.

Automatic classification of variable stars in catalogs with missing data

This project was developed by Karim Pichara, in collaboration with Professor Pavlos Protopapas. We present an automatic classification method for astronomical catalogs with missing data. We use Bayesian networks and a probabilistic graphical model that allows us to perform inference to predict missing values given observed data and dependency relationships between variables. To learn a Bayesian network from incomplete data, we use an iterative algorithm that utilizes sampling methods and expectation maximization to estimate the distributions and probabilistic dependencies of variables from data with missing values. To test our model, we use three catalogs with missing data (SAGE, Two Micron All Sky Survey, and UBVI) and one complete catalog (MACHO). We examine how classification accuracy changes when information from missing data catalogs is included, how our method compares to traditional missing data approaches, and at what computational cost. Integrating these catalogs with missing data, we find that classification of variable objects improves by a few percent and by 15% for quasar detection while keeping the computational cost the same.

Supervised detection of anomalous light-curves in massive astronomical catalogs

This project was developed by Isadora Nun, in collaboration with Professor Pavlos Protopapas. The development of synoptic sky surveys has led to a massive amount of data for which resources needed for analysis are beyond human capabilities. In order to process this information and to extract all possible knowledge, machine learning techniques become necessary. Here we present a new methodology to automatically discover unknown variable objects in large astronomical catalogs. With the aim of taking full advantage of all information we have about known objects, our method is based on a supervised algorithm. In particular, we train a random forest classifier using known variability classes of objects and obtain votes for each of the objects in the training set. We then model this voting distribution with a Bayesian network and obtain the joint voting distribution among the training objects. Consequently, an unknown object is considered as an outlier insofar it has a low joint probability. By leaving out one of the classes on the training set we perform a validity test and show that when the random forest classifier attempts to classify unknown lightcurves (the class left out), it votes with an unusual distribution among the classes. This rare voting is detected by the Bayesian network and expressed as a low joint probability. Our method is suitable for exploring massive datasets given that the training process is performed offline.

Fondecyt Projects